Amit Mital kicked off TechEd Australia 2008 today with a keynote presentation on Microsoft’s view of how software and services will develop in the future, particularly in relation to their new Live Mesh offering. There is a good summary of his presentation on the TechEd New Zealand site; it seems they got an identical opening keynote. For someone who loves networks he sure doesn’t seem to like professional networks!

One line of reasoning in his speech struck me. Moore’s law is still holding true, and computer hardware continues to double in processing power roughly every 18 months. This computing power is also turning up in more and more places. But when was the last time your network doubled in speed? What about doubling in speed to each additional node? Because processing power has grown so much faster than the network, two things are obvious even today:

- Computers are islands of computing power – There is no seamless transfer of data between your devices. You work on a file at work, email it home, download it at home, work on it and send it back.

- Deploying local machines is too hard – Each branch office needs a rack, servers, backup, redundancy, configuration, support, licencing…
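To put the keynote's 18-month doubling figure in perspective, here is a quick back-of-the-envelope calculation. It assumes a perfectly steady doubling period (a simplification of Moore's law), with $t$ in years and $P_0$ the processing power today, and compares it against a hypothetical network link that does not get any faster over the same decade:

$$
P(t) = P_0 \cdot 2^{t/1.5}, \qquad \frac{P(10)}{P_0} = 2^{10/1.5} = 2^{6.67} \approx 100
$$

Under those assumptions, each machine ends up with roughly a hundred times more computing power per unit of bandwidth, which is exactly why the islands above get more isolated rather than less.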

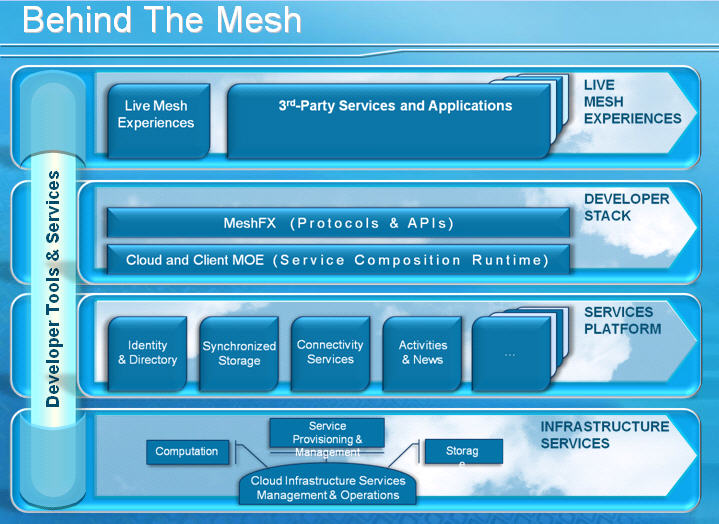

Microsoft’s solution at a high level is the Mesh stack, the structure of which can be seen in the slide shown here. The fundamentals are that local software is fast and hosted services are convenient, so let's tie them together with an API and get the best of both worlds. The trick is getting the balance right: where does a local application end and the service begin? How do you split the business logic? How do you provide offline access and quick sign-on to new devices? Hmmm…

Microsoft’s current practical solution is to rewrite most of its server packages to allow hosted delivery, with Hosted Exchange as the obvious flagship. Google have taken a different approach. They believe that all you should need on your desktop is Chrome, essentially an all-purpose thin client rather than a thick client on a drip feed.

So who is right? Well, I am betting things will converge on a middle-of-the-road approach. With current technology, I would say that JavaScript, a web browser and some sort of XML interface would be the best way to go. A few things need to develop from here:

- APIs need to be standardised and built into the browser (or the OS, as the two merge). Something like JavaScript libraries, but compiled, lightning fast and highly reusable. Chrome is getting there.

- Data transfer needs to be better than XML. Think highly compressed, encrypted on the fly, but quickly decoded into a human readable format if necessary. Microsoft’s MeshFX is getting there because it has authentication and other services built in, but it needs to be open like SOAP.
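To make the data-transfer point a little more concrete, here is a minimal Python sketch of the "compress it for the wire, decode it back to readable text when needed" idea. It is only an illustration under stated assumptions: the standard-library `zlib` and `xml.etree.ElementTree` modules stand in for a real transport format, the record layout and the `build_xml`/`pack`/`unpack` helpers are hypothetical, and encryption is deliberately left out (in practice it would be layered on separately, for example with TLS). It says nothing about how MeshFX actually encodes its data.

```python
import zlib
import xml.etree.ElementTree as ET


def build_xml(records):
    """Hypothetical helper: serialise (name, value) pairs as a small XML document."""
    root = ET.Element("records")
    for name, value in records:
        item = ET.SubElement(root, "record")
        ET.SubElement(item, "name").text = name
        ET.SubElement(item, "value").text = str(value)
    return ET.tostring(root, encoding="utf-8")  # returns bytes


def pack(xml_bytes: bytes) -> bytes:
    """Hypothetical helper: compress the XML for transfer (no encryption here)."""
    return zlib.compress(xml_bytes, level=9)


def unpack(payload: bytes) -> str:
    """Hypothetical helper: decompress back into human-readable XML text."""
    return zlib.decompress(payload).decode("utf-8")


# Illustrative data only: 100 repetitive records, typical of verbose markup.
records = [(f"item{i}", i * 10) for i in range(100)]
xml_bytes = build_xml(records)
payload = pack(xml_bytes)

print(len(xml_bytes), "bytes of XML ->", len(payload), "bytes compressed")
print(unpack(payload)[:80])  # the first few characters, readable again
```

Repetitive markup like this compresses very well, which is part of why plain XML feels wasteful on the wire; the trade-off is that the payload is opaque until it is decompressed, so the "quickly decoded" part matters as much as the compression.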

So I guess the race is on! Google will take JavaScript to its limits, and Microsoft will try to blow us away with its feature set. When will they sit down and standardise on the next generation of JavaScript and data formats?
